A Virtual Reality-Based Surgeon Design Interface for Patient Anatomy-Specific, Nonlinear Renal Access Using Concentric Tube Robots

Michael H. Hsieh, MD/PhD¹, Tania K. Morimoto, BS², Joseph D. Greer, BS², Allison M. Okamura, PhD².

¹Children's National Medical Center, Washington, DC, USA; ²Stanford University, Stanford, CA, USA.

Background: Straight needle-based percutaneous renal access is sometimes not possible because of intervening tissues or patient body habitus. For example, access to the upper pole, often the best angle for nephrostolithotomy, can be hazardous because the pleura lies between the kidney and the planned needle entry site. Percutaneous access to the kidney can be particularly challenging in children because of their smaller anatomy. Although fluoroscopy and 2D ultrasound can help guide percutaneous renal access, these imaging modalities have significant downsides: exposure to ionizing radiation and the inability to assess the 3D position of the needle, respectively. We propose instead to use 3D ultrasound to guide curved-path percutaneous access to the kidney through the development of novel, personalized concentric tube robots. These robots are composed of multiple telescoping, concentric, precurved, superelastic tubes that can be axially translated and rotated relative to one another to provide a curved working channel for needle access. To achieve this goal, we first sought to develop a virtual reality-based surgeon design interface.
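To make the idea of a curved working channel concrete, the following is a minimal sketch of how a tip position could be computed for a chain of precurved sections. It assumes a planar, piecewise constant-curvature model in which each exposed section keeps its own curvature; it ignores tube rotation out of the plane, torsion, and the elastic interaction between overlapping tubes, so it is an illustration rather than the model used in this work. The names `Section` and `tip_pose` and all numeric values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Section:
    """One exposed section of the robot (hypothetical parameterization)."""
    curvature: float  # signed curvature in 1/mm; 0 means a straight section
    length: float     # exposed arc length in mm


def tip_pose(sections, x=0.0, y=0.0, heading=0.0):
    """Chain planar constant-curvature arcs and return (x, y, heading).

    heading is the tangent direction in radians, measured from the +x axis.
    """
    for s in sections:
        if abs(s.curvature) < 1e-12:
            # Straight section: advance along the current tangent.
            x += s.length * math.cos(heading)
            y += s.length * math.sin(heading)
        else:
            # Circular arc: integrate the tangent direction over the arc length.
            new_heading = heading + s.curvature * s.length
            x += (math.sin(new_heading) - math.sin(heading)) / s.curvature
            y += (math.cos(heading) - math.cos(new_heading)) / s.curvature
            heading = new_heading
    return x, y, heading


# Example: a 10 mm straight section followed by a quarter circle of radius 50 mm.
sections = [Section(0.0, 10.0), Section(1.0 / 50.0, 25.0 * math.pi)]
x, y, heading = tip_pose(sections)
print(f"tip = ({x:.1f}, {y:.1f}) mm, heading = {math.degrees(heading):.0f} deg")
```

In this simplified picture, translating a tube changes a section's exposed length, and a patient-specific design amounts to choosing curvatures and lengths so the path avoids structures such as the pleura.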

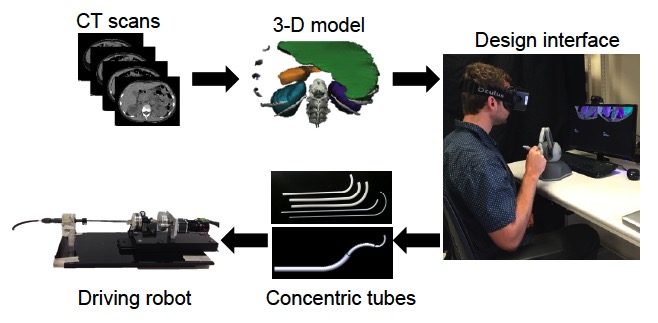

Methods: We built the design interface using CHAI3D, an open-source framework for haptics, visualization, and interactive real-time simulation. To provide the surgeon with an immersive experience, we integrated an Oculus Rift Development Kit 2 (virtual reality head-mounted display), a PHANTOM Omni (six degree-of-freedom haptic device), and a set of da Vinci Surgical System foot pedals. The overall workflow, shown in Figure 1, begins with segmenting CT scans of an actual pediatric patient with a renal stone and reconstructing a 3D model of the anatomy of interest. This 3D model is then rendered in the virtual environment, where the surgeon can design a concentric tube robot specific to that patient. We developed four main modes to allow for an intuitive design process: Camera Mode (environment exploration), Initialization Mode (choosing the skin entry point), Design Mode (altering tube parameters), and Playback Mode (watching a simulated procedure).
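The four interaction modes can be pictured as a small state machine. The sketch below is illustrative only: the abstract does not describe how modes are switched, so the assumption that a single input (for example, a foot pedal press) advances through the modes in the order listed is hypothetical, as are the names `Mode` and `next_mode`. It is written in Python for brevity and is not CHAI3D code.

```python
from enum import Enum


class Mode(Enum):
    """The four interface modes, with the purpose given in the abstract."""
    CAMERA = "environment exploration"
    INITIALIZATION = "choosing the skin entry point"
    DESIGN = "altering tube parameters"
    PLAYBACK = "watching a simulated procedure"


def next_mode(current):
    """Advance to the next mode, wrapping around after Playback.

    Assumption: modes are cycled in definition order by one input event.
    """
    order = list(Mode)  # Enum members iterate in definition order
    return order[(order.index(current) + 1) % len(order)]


# Example: step through one full cycle starting from Camera Mode.
mode = Mode.CAMERA
for _ in range(len(Mode)):
    print(f"{mode.name.title()} Mode: {mode.value}")
    mode = next_mode(mode)
```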

Results: We successfully developed a design interface that leverages the expertise of the surgeon by putting the surgeon in the design loop. We also demonstrated the feasibility of teaching a surgeon to use such an interface. A fellowship-trained pediatric urologist was given the task of designing a concentric tube robot to reach an upper pole kidney stone. After an introduction to the various features and interaction modes, the surgeon was able to design two different robots in less than six minutes and three minutes, respectively.

Conclusions: We propose to develop personalized concentric tube robots to safely access hard-to-reach places within the body. We built a design interface that allows the surgeon to be involved in the design process and demonstrated the feasibility of this approach. Our next steps include quantitative assessment of the output designs, followed by manufacturing and physical testing in geometrically accurate environments, and integration of 3D ultrasound guidance.

Back to 2016 Fall Congress