Can artificial intelligence replace the decision making of a pediatric urologist?

Alexandra R. Siegal, MD¹, Fernando A. Ferrer, MD², Neha R. Malhotra, MD².

¹Icahn School of Medicine at Mount Sinai, New York, NY, USA; ²Mount Sinai Kravis Children’s Hospital, New York, NY, USA.

BACKGROUND: Over the past two decades, artificial intelligence (AI) has gained popularity within healthcare as a life-altering technology. In November 2022, ChatGPT (OpenAI Inc) was introduced as a sophisticated AI chatbot built on a large language model that generates human-like text using deep learning. Because it is free and user-friendly, it has been rapidly adopted by the public. The role of ChatGPT in pediatric urology is still in its infancy. We sought to assess the ability of ChatGPT to accurately make and explain decisions regarding a common adolescent diagnosis: varicocele.

METHODS: Semi-structured interviews were conducted with pediatric urologists and interventional radiologists to discuss varicoceles and the rationale behind their decision making. During the first two interviews, a script of questions was pilot tested and then refined into a list of sixteen questions that was read uniformly during each subsequent interview (n = 10). The same script was then submitted to ChatGPT. Interviews with providers continued until thematic saturation was reached. Interview transcripts were coded, and key themes were identified, grouped, and qualitatively examined using thematic analysis. ChatGPT's answers were screened for accuracy, and the themes identified were compared with those of the physician group for overlap.

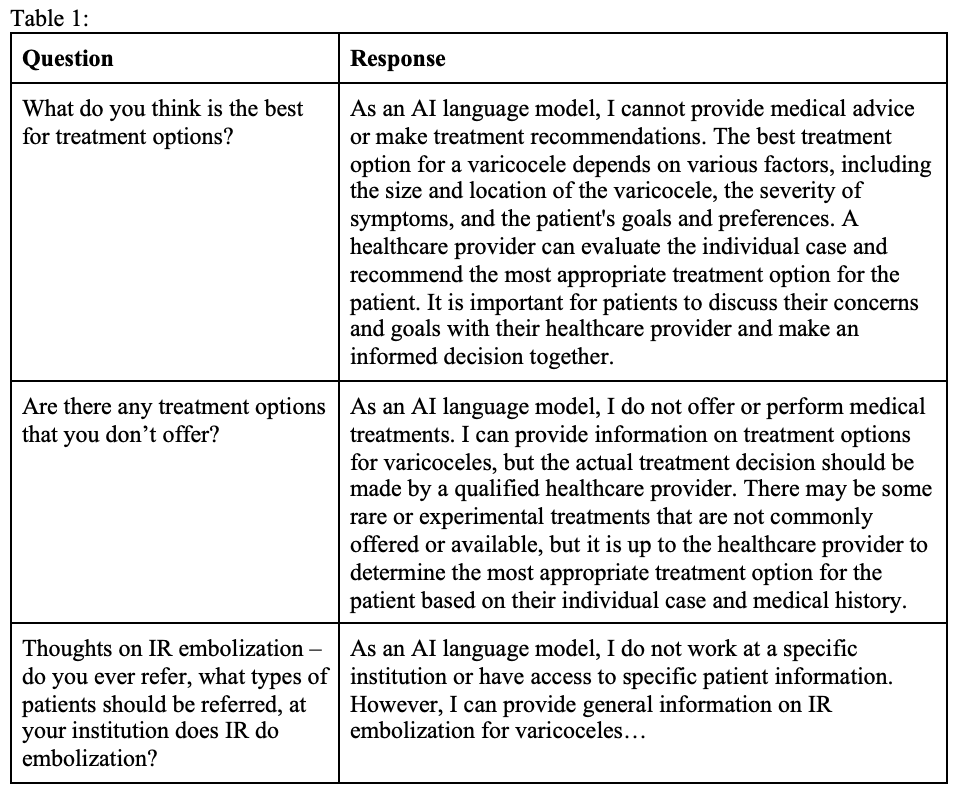

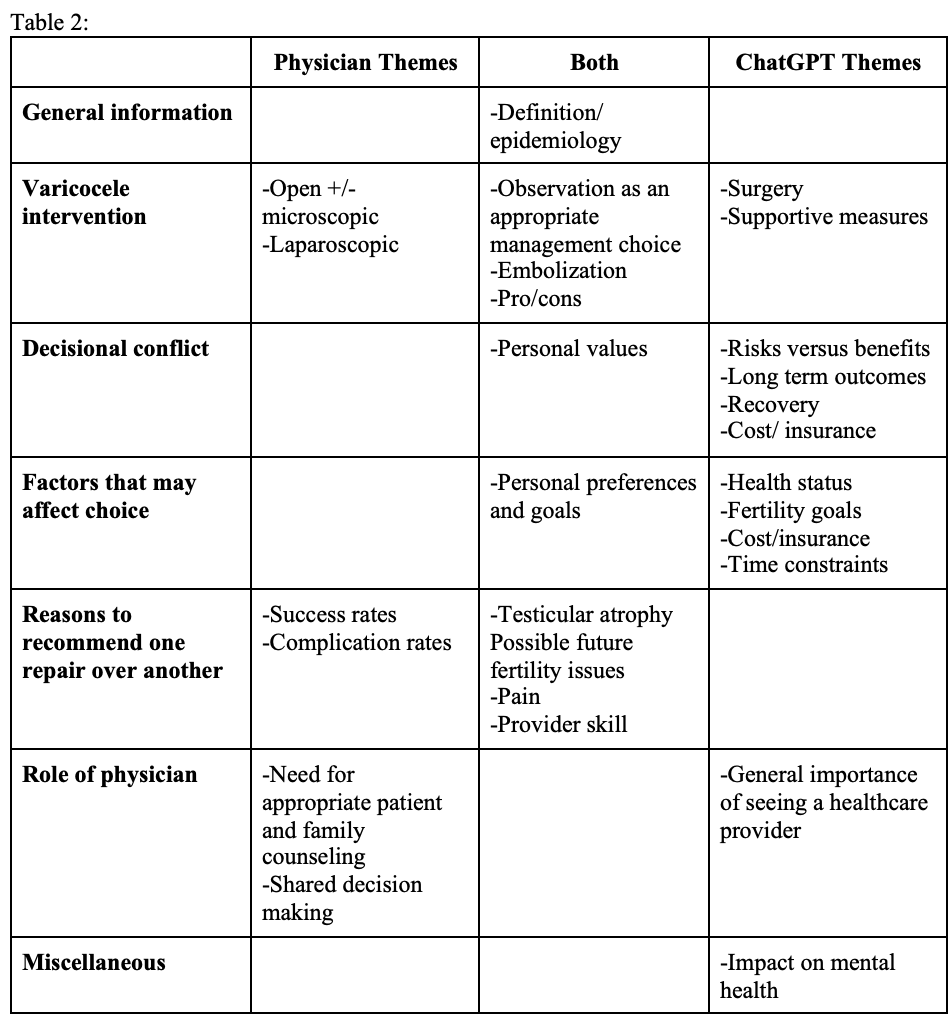

RESULTS: Pediatric urologists (n = 10) and interventional radiologists (n = 2) across 7 different academic institutions were interviewed. Participants had a median of 9.5 years (IQR 2-16.8) in practice post-fellowship; 67% were male and 75% were Caucasian. Each interview lasted a median of 12:28 minutes (IQR 11:10-14:24). The response from ChatGPT was 3,742 words long with a 99% accuracy rate. Per the Gunning Fog Index, its readability was at a 14th-grade level. ChatGPT was unable to answer three questions related to the best treatment option recommendation and offerings (Table 1). The overlap between physician and ChatGPT themes is shown in Table 2. New themes mentioned by ChatGPT included decisional conflict and choices related to cost, insurance status, and time constraints. Compared to physicians, ChatGPT was less specific about surgery type. ChatGPT also focused heavily on the general importance of seeing a healthcare provider, while physicians focused on the need for appropriate patient and family counseling along with shared decision making.
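For readers unfamiliar with the readability measure cited above, the Gunning Fog Index is conventionally computed as 0.4 × (average words per sentence + percentage of "complex" words, meaning words of three or more syllables). The sketch below is illustrative only and is not the tool used in this study, which the abstract does not name. The function names `count_syllables` and `gunning_fog` are hypothetical, the syllable counter is a crude vowel-group heuristic, and the usual exclusions (proper nouns, compound words, common suffixes) are ignored, so its scores may differ from those of a dedicated readability calculator.

```python
import re


def count_syllables(word: str) -> int:
    """Rough syllable estimate: count runs of consecutive vowels (assumption)."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))


def gunning_fog(text: str) -> float:
    """Approximate Gunning Fog Index of an English text."""
    # Split on sentence-ending punctuation and drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")

    # "Complex" words: three or more (estimated) syllables.
    complex_words = [w for w in words if count_syllables(w) >= 3]

    avg_sentence_length = len(words) / len(sentences)
    percent_complex = 100 * len(complex_words) / len(words)
    return 0.4 * (avg_sentence_length + percent_complex)
```

A score near 14, as reported here, is usually read as text requiring roughly 14 years of formal education, which is above the reading level generally recommended for patient-facing material.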

CONCLUSIONS: As pediatric urologists, we must lead the adoption of AI to shape it in a way that will augment our practices. While ChatGPT is able to give suggestions, it steers away from drawing conclusions that are not clear-cut; it is thus unable to opine on the best treatment modality and defers final decision making. As such, doctors can leverage AI platforms to provide background knowledge and to present the pros and cons of each treatment; however, ChatGPT cannot replace the experience and judgment of a physician.
